Quantized Magic Wand on Nicla

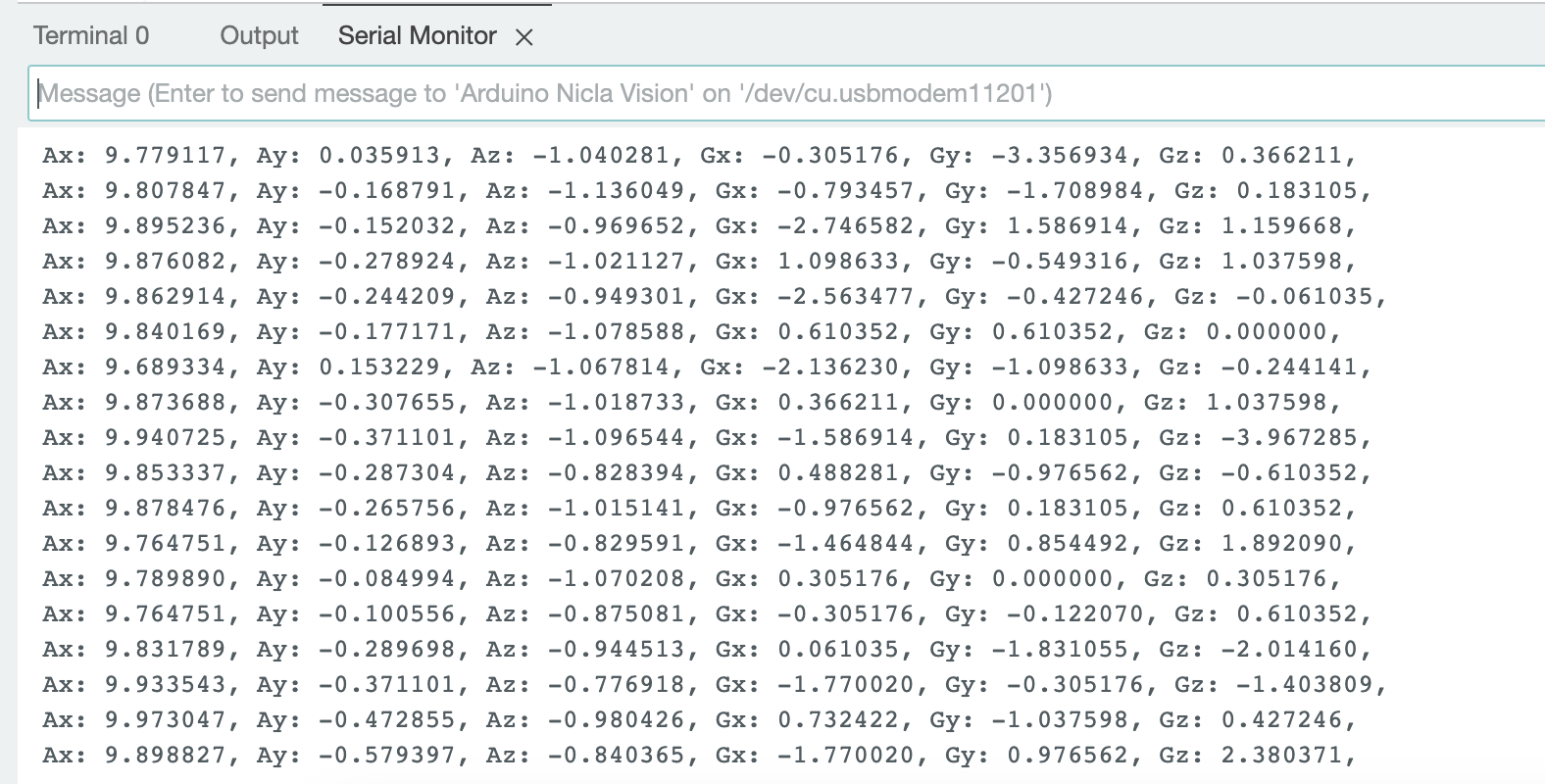

In this project I worked with the TensorFlow Lite ecosystem using IMU data from the Arduino Nicla Vision. The objective was to build a TensorFlow model, optimize it with TensorFlow Lite's quantization methods, apply pruning, and convert the result for TensorFlow Lite Micro. This involved constructing convolutional neural networks (CNNs), measuring their size after training, and examining how quantization and pruning affected each model. The assignment was unusual in that its input is IMU data, which is time-series in nature. To begin, I set up the Arduino Nicla Vision, installed the libraries required for the IMU sensor, and established Bluetooth communication. I then built a wand dataset by recording gestures and converted each recording into an image, which served as the input to the CNN.
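To make the "gestures to images" step concrete, here is a minimal sketch of one way to rasterize a stroke into a small grayscale grid. It assumes the IMU readings have already been reduced to a sequence of 2D `(x, y)` points. That projection step, the `rasterize_stroke` name, and the 8x8 size are illustrative assumptions, not necessarily what my pipeline did.

```python
def rasterize_stroke(points, size=32):
    """Draw a sequence of (x, y) stroke points into a size x size grid.

    Pixels on the stroke are set to 255 and all others stay 0.
    The grid is indexed as image[row][col], with row following y.
    """
    image = [[0] * size for _ in range(size)]
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)

    # Use one scale for both axes so the gesture keeps its aspect ratio.
    # A zero extent (single point) falls back to 1.0 to avoid dividing by zero.
    span = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    scale = (size - 1) / span

    pixels = [
        (int(round((x - min_x) * scale)), int(round((y - min_y) * scale)))
        for x, y in points
    ]

    # Mark every sampled point, then connect consecutive points with
    # linearly interpolated pixels so the stroke has no gaps.
    for col, row in pixels:
        image[row][col] = 255
    for (x0, y0), (x1, y1) in zip(pixels, pixels[1:]):
        steps = max(abs(x1 - x0), abs(y1 - y0), 1)
        for i in range(steps + 1):
            col = x0 + round((x1 - x0) * i / steps)
            row = y0 + round((y1 - y0) * i / steps)
            image[row][col] = 255
    return image


# Hypothetical "V"-shaped gesture, drawn into an 8x8 image for readability.
stroke = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
image = rasterize_stroke(stroke, size=8)
for row in image:
    print("".join("#" if value else "." for value in row))
```

Normalizing every gesture to the same grid means the CNN sees the shape of the motion rather than its absolute size or position, which is the main reason to turn the time series into an image at all.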

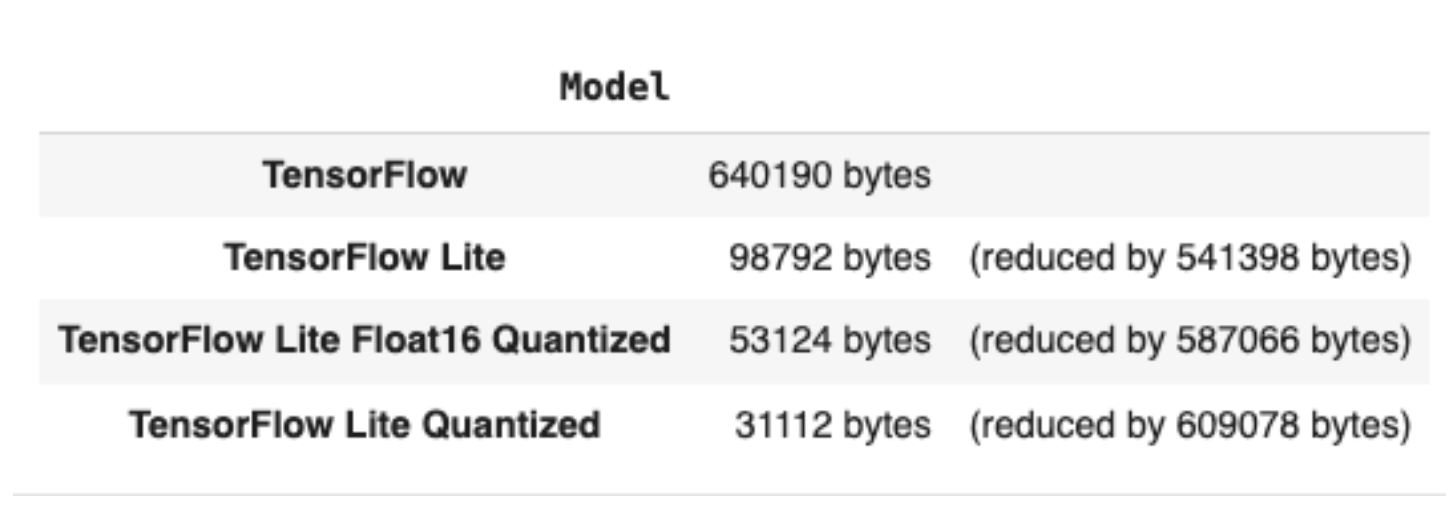

First, I verified that the IMU readings were working correctly by watching the values change as I moved the device. Next, I designed a CNN architecture and trained it on the dataset, then applied Float16 quantization to the model weights and pruning to specific layers. At each stage I measured the accuracy and size of the resulting model so that I could compare the effects of quantization and pruning. In terms of size, my quantized model was about 20.6 times smaller than the original TensorFlow model, and my Float16 quantized model was about 1.86 times smaller than the TensorFlow Lite model. In terms of accuracy, the baseline CNN model reached 89.55% on the test dataset, my CNN model reached 96.36%, and the pruned model reached 93.18%. The quantized model retained most of the accuracy of the original model. In the end, I chose the TensorFlow Lite Quantized model as my preferred model because of its small size and its retention of accuracy.
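The size ratios above can be illustrated with a small stand-alone sketch of what Float16 storage does to a weight array. It uses only Python's `struct` module, not the TensorFlow Lite converter, and the weight values and helper names are made up for illustration.

```python
import struct


def pack_float32(weights):
    """Store weights as little-endian 32-bit floats (4 bytes each)."""
    return struct.pack(f"<{len(weights)}f", *weights)


def pack_float16(weights):
    """Store weights as little-endian IEEE 754 half floats (2 bytes each)."""
    return struct.pack(f"<{len(weights)}e", *weights)


def unpack_float16(blob):
    """Read half-precision values back into Python floats."""
    return list(struct.unpack(f"<{len(blob) // 2}e", blob))


def size_ratio(original_bytes, compressed_bytes):
    """How many times smaller the compressed form is."""
    return original_bytes / compressed_bytes


# Hypothetical layer weights, chosen only for illustration.
weights = [0.125, -0.5, 0.3333, 1.75, -0.0421]

float32_blob = pack_float32(weights)
float16_blob = pack_float16(weights)
restored = unpack_float16(float16_blob)

# Largest absolute rounding error introduced by the narrower format.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("float32 bytes:", len(float32_blob))
print("float16 bytes:", len(float16_blob))
print("size ratio:", size_ratio(len(float32_blob), len(float16_blob)))
print("max rounding error:", max_error)
```

On raw weights alone the ratio is exactly 2. A plausible reason a whole model file lands a little under that, as with my 1.86 figure, is that the file also contains data that is not weights, such as the graph structure and metadata, and that part does not shrink.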